Welcome to Sycamore!

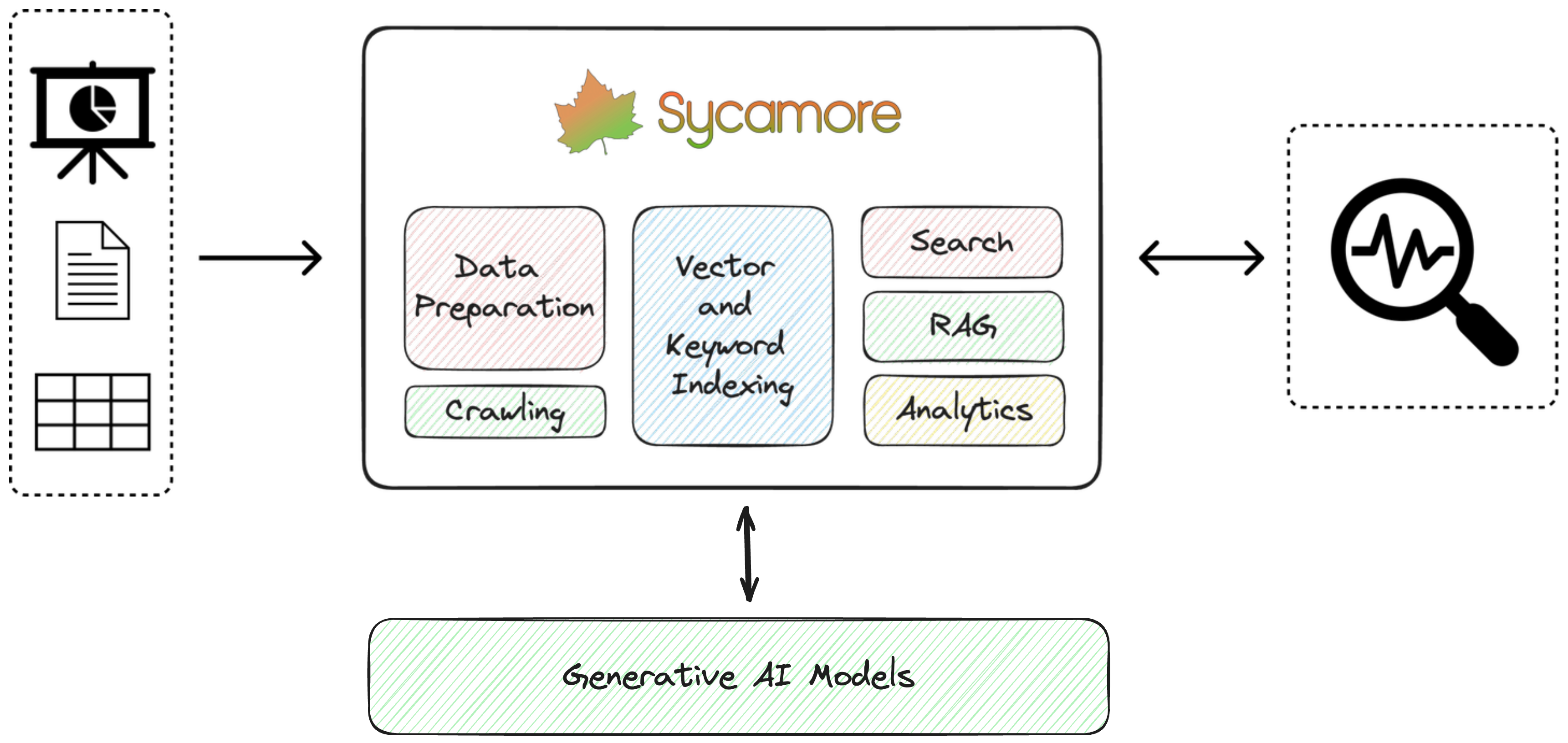

Sycamore is an open-source conversational search and analytics platform for complex unstructured data, such as documents, presentations, transcripts, embedded tables, and internal knowledge repositories. It retrieves and synthesizes high-quality answers by bringing AI to data preparation, indexing, and retrieval. Sycamore makes it easy to prepare unstructured data for search and analytics, providing a toolkit for data cleaning, information extraction, enrichment, summarization, and generation of vector embeddings that encapsulate the semantics of the data. Sycamore uses your choice of generative AI models to make these operations simple and effective, and it enables quick experimentation and iteration. Additionally, Sycamore uses OpenSearch to store and query vector embeddings and their associated data.

Key Features

Answer Hard Questions on Complex Data. Prepares and enriches complex unstructured data for search and analytics through advanced data segmentation, LLM-powered UDFs for data enrichment, performant data manipulation with Python, and vector embeddings using a variety of AI models.

Multiple Query Options. Flexible query operations over unstructured data, including retrieval-augmented generation (RAG), hybrid search, analytical functions, natural language/conversational search, and custom post-processing functions.

Secure and Scalable. Sycamore uses OpenSearch, an open-source, enterprise-scale search and analytics engine, for indexing, enabling hybrid (vector + keyword) search, analytical functions, conversational memory, and more. OpenSearch also offers features like fine-grained access control, and it is used by thousands of enterprise customers for mission-critical workloads.

Develop Quickly. Helpful features such as automatic data crawlers (Amazon S3 and HTTP) and Jupyter notebook support let you create, iterate on, and test custom data preparation code.

Plug-and-Play LLMs. Use different LLMs for entity extraction, vector embedding, RAG, and post-processing steps. Sycamore currently supports OpenAI and Amazon Bedrock, with more to come!

Getting Started

You can easily deploy Sycamore locally or on a virtual machine using Docker.

With Docker installed:

Clone the Sycamore repo:

git clone https://github.com/aryn-ai/sycamore

Set your OpenAI API key:

export OPENAI_API_KEY=YOUR-KEY

Go to the cloned directory:

cd sycamore

Launch Sycamore. The containers are pulled from Docker Hub:

docker compose up --pull=always

The Sycamore demo query UI is located at:

http://localhost:3000/
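The setup steps above can be collected into a small Bash helper. This is a minimal sketch, not something shipped with Sycamore: the function names require_openai_key and start_sycamore are hypothetical, and it assumes Git and Docker Compose v2 (the docker compose subcommand) are installed. It checks for the API key before any containers are pulled and skips the clone if a sycamore directory already exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper wrapping the Getting Started steps; not part of the Sycamore repo.

# Fail early if the OpenAI key is missing, before any containers are pulled.
require_openai_key() {
  if [ -z "${OPENAI_API_KEY:-}" ]; then
    echo "OPENAI_API_KEY is not set; run: export OPENAI_API_KEY=YOUR-KEY" >&2
    return 1
  fi
}

start_sycamore() {
  require_openai_key || return 1
  # Clone only on the first run; reuse an existing checkout afterwards.
  if [ ! -d sycamore ]; then
    git clone https://github.com/aryn-ai/sycamore || return 1
  fi
  # Changes the caller's working directory, like the "Go to" step.
  cd sycamore || return 1
  # Pull the latest images from Docker Hub and start the containers in the foreground.
  docker compose up --pull=always
}
```

To use it, source the file, run export OPENAI_API_KEY=YOUR-KEY, and then call start_sycamore. Keeping the key check in its own function makes it easy to reuse in other scripts that talk to the running containers.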

Next, you can run a demo that prepares and ingests data from the Sort Benchmark website, crawl data from a public website, or write your own data preparation script (see /data_ingestion_and_preparation/using_jupyter).

For more info about Sycamore’s data ingestion and preparation feature set, visit the Sycamore documentation.

Run a demo

Load the demo dataset using the HTTP crawler, as shown in this tutorial:

docker compose run crawl_sort_benchmark

Load data from a website via the HTTP crawler, as shown in this tutorial:

docker compose run crawl_http http://my.website.example.com
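To crawl several websites in one go, the crawl_http command can be run in a loop. This is a minimal sketch: crawl_sites is a hypothetical helper, and it assumes that crawl_http takes one starting URL per run, as in the command above. It crawls the sites in order and stops at the first failed crawl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the HTTP crawler once per starting URL, in order.
crawl_sites() {
  if [ "$#" -eq 0 ]; then
    echo "usage: crawl_sites URL [URL...]" >&2
    return 2
  fi
  local url
  for url in "$@"; do
    echo "Crawling $url" >&2
    # Same command as above: one crawler container run per site.
    docker compose run crawl_http "$url" || return 1
  done
}
```

For example, crawl_sites http://my.website.example.com http://another.website.example.com crawls both sites one after the other. Running the crawls sequentially keeps the load on the target sites and the local machine predictable.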

Write custom data ingestion and preparation code using the Jupyter container. Find the URL for accessing it with:

docker compose logs jupyter | grep Visit
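If a script needs only the URL itself, the matching log line can be filtered further. This sketch assumes, as the command above implies, that the Visit line contains an http(s) URL; jupyter_url is a hypothetical helper. It prints the last URL found, so the newest one wins if the container has restarted, and it fails with a message if no URL has been logged yet.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the most recent URL from the Jupyter container's "Visit" log line.
jupyter_url() {
  local url
  url=$(docker compose logs jupyter \
    | grep Visit \
    | grep -oE 'https?://[^[:space:]]+' \
    | tail -n 1)
  if [ -z "$url" ]; then
    echo "No Jupyter URL found yet; is the jupyter container running?" >&2
    return 1
  fi
  printf '%s\n' "$url"
}
```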

Once you’ve loaded data, you can run conversational search on it with the Sycamore demo query UI at http://localhost:3000/.
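The containers can take a while to start after docker compose up, so a script that drives the demo may want to wait until the query UI responds. This is a minimal sketch using curl: wait_for_ui is a hypothetical helper, and its defaults (the localhost:3000 address from above, 60 attempts one second apart) are arbitrary choices, not values taken from Sycamore.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll the demo query UI until it answers or the attempts run out.
wait_for_ui() {
  local url="${1:-http://localhost:3000/}"
  local attempts="${2:-60}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: treat HTTP error codes as failures; -s: silent; -o /dev/null: discard the page body.
    if curl -fs -o /dev/null "$url"; then
      echo "UI is up at $url"
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "Timed out waiting for $url" >&2
  return 1
}
```

Calling wait_for_ui with no arguments polls http://localhost:3000/ for about a minute; pass a URL and an attempt count, such as wait_for_ui http://localhost:3000/ 120, to wait longer.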

For more details about getting started, visit the Sycamore Getting Started page.

More Resources

Join the Sycamore Slack workspace: https://join.slack.com/t/sycamore-ulj8912/shared_invite/zt-23sv0yhgy-MywV5dkVQ~F98Aoejo48Jg

View the Sycamore GitHub: https://github.com/aryn-ai/sycamore